October 2, 2017

Won't Get Fooled Again

Oh Noes! The Sky is Falling!

It was relatively quiet, late one Friday afternoon, with many of the Gremlin employees gearing up for a long weekend filled with festivities, when all of a sudden alarms started blaring. Our service had decided to start serving 5xx errors, more or less indiscriminately, just as everyone was getting ready to call it quits. I'm sure you can imagine our surprise, considering nobody was monkeying with the database, pushing code to production or doing anything of real consequence. Nevertheless, our service was on fire, and in desperate need of a little TLC, so we spun up a call and hopped on the offending instances.

After a relatively brief bout of log diving Philip Gebhardt was able to suss out the issue: log rotation wasn't working properly and as a result the disk had run out of space. Face, meet palm. It seemed ludicrous that something so trivial could go unnoticed to the point where it interrupted our blissful slide into the weekend. We rotated the instances in the Auto Scaling Group and made a promise to ourselves that we wouldn't get bit by this again.

Fool Me Once

Now I've made similar promises before, just to watch them fall by the wayside as priorities shifted. But at Gremlin, we vehemently believe in never failing the same way twice. After all, how would it look if we weren't willing to practice what we preach?

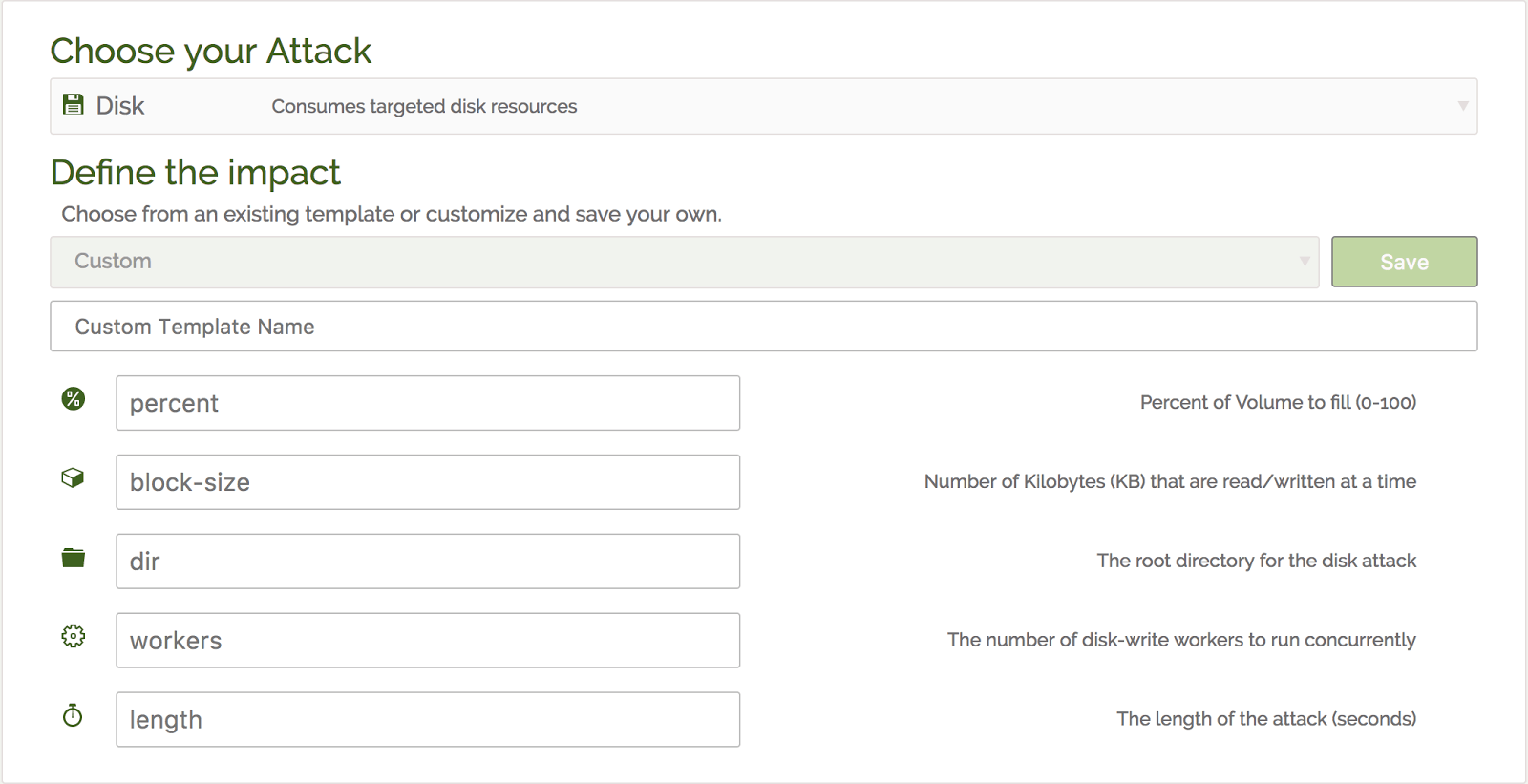

Given our profound level of combined experience in systems engineering, it's no surprise we're huge proponents of the unix philosophy. As such, each Gremlin is a single-purpose task, meant to replicate a real world failure mode. Since disk exhaustion was an unprecedented failure mode for us, Matt Jacobs, our newest engineer, found himself with an initiation task, writing the Disk Gremlin (seen below).

Instead of sitting back on our haunches and crossing our fingers that our newly created mitigation steps would work when called upon, we went proactive and decided to make sure there was no way they wouldn't.

What TODO?

Easier said than done, right? It doesn't have to be. Ensuring you don't suffer from the same failure mode more than once boils down to four main steps.

- Experiment -- During the experimentation phase, we toyed with several different approaches to ensuring that a full disk doesn't mean an angry API. We ultimately landed on publishing custom disk utilization metrics to CloudWatch (via mon-scripts) and triggering an EC2 Stop Instance Event when disk utilization is approaching 100%. NOTE Of course, this still leaves us susceptible to bugs that constantly overload our disks, so throwing an alarm to engage human investigation after several successive rotations was layered on as a catch all.

- Implement -- We then baked the monitoring and alarming into our build process, to ensure that all future deployments come with full insight into disk utilization.

- Validate -- After our first deploy, I ran a one-off attack using the newly created Disk gremlin, to ensure that the alarm fired, the service instance terminated and that the ASG replaced it with a shiny new instance, as expected.

- Automate -- But the real magic lies in turning the one-off attack into a scheduled attack which runs daily, at random, against our production service. During business hours of course. In doing that, we ensure that we don't become susceptible to a full disk as our code base and architecture continue to mutate. If we ever suffer a regression, the Disk gremlin will proactively trigger said alarms and we'll be able to pinpoint the root cause of the regression.

Continuous Chaos

You may ask if that truly frees a system from drifting into failure, ultimately ensuring we never see the same failure mode twice? The answer, for the most part, is yes. Incrementally building a knowledge base of failure modes, creating tests that exercise these situations and then constantly executing them against your system is akin to regression testing and Continuous Integration, but with a slightly different goal in mind. Instead of testing in a perfect world, where network calls respond instantly and resources are never in contention, you're simulating the real world, where chaos ensues and entropy abounds. We call it Continuous Chaos, and it's not all that different from many best practices you've likely already adopted.

I'm sure some of you are thinking, "That's all well and good Forni, but not all of us can regularly be slowing down network connections or pushing our disk to 95%, and DEFINITELY not in production" to which I say, "Why not?" It's going to happen one day, one way or another. Instead of dealing with disaster as you're counting down the minutes to a long weekend, wouldn't you rather it be on your own terms?

Interested in unleashing a little hell at your company?

Feel free to shoot us an email!